A Brief History of the Internet of Things (IoT)

Did you know that the first ever IoT device was created to make soda runs easier?

The first IoT device was not built for a significant project, nor was it built by a tech giant. A group of students at Carnegie Mellon University got tired of walking to a Coca-Cola vending machine only to find it empty. In response, they built the first smart vending machine, which tracked its own contents.

The history of IoT, however, does not begin with this incident. To trace it accurately, we need to walk through the entire timeline of the Internet of Things.

What to expect: We explain how IoT came into being by highlighting the key events since the 1960s that led to the Internet of Things as we know it today. If you’d like to know more about the technology itself before diving into the history, we have created a handy guide for IoT enthusiasts.

Internet of Things—a comprehensive timeline

The internet is a fundamental part of IoT, so the invention of networked computing is the first milestone in the history of IoT.

The birth of the Internet and IoT

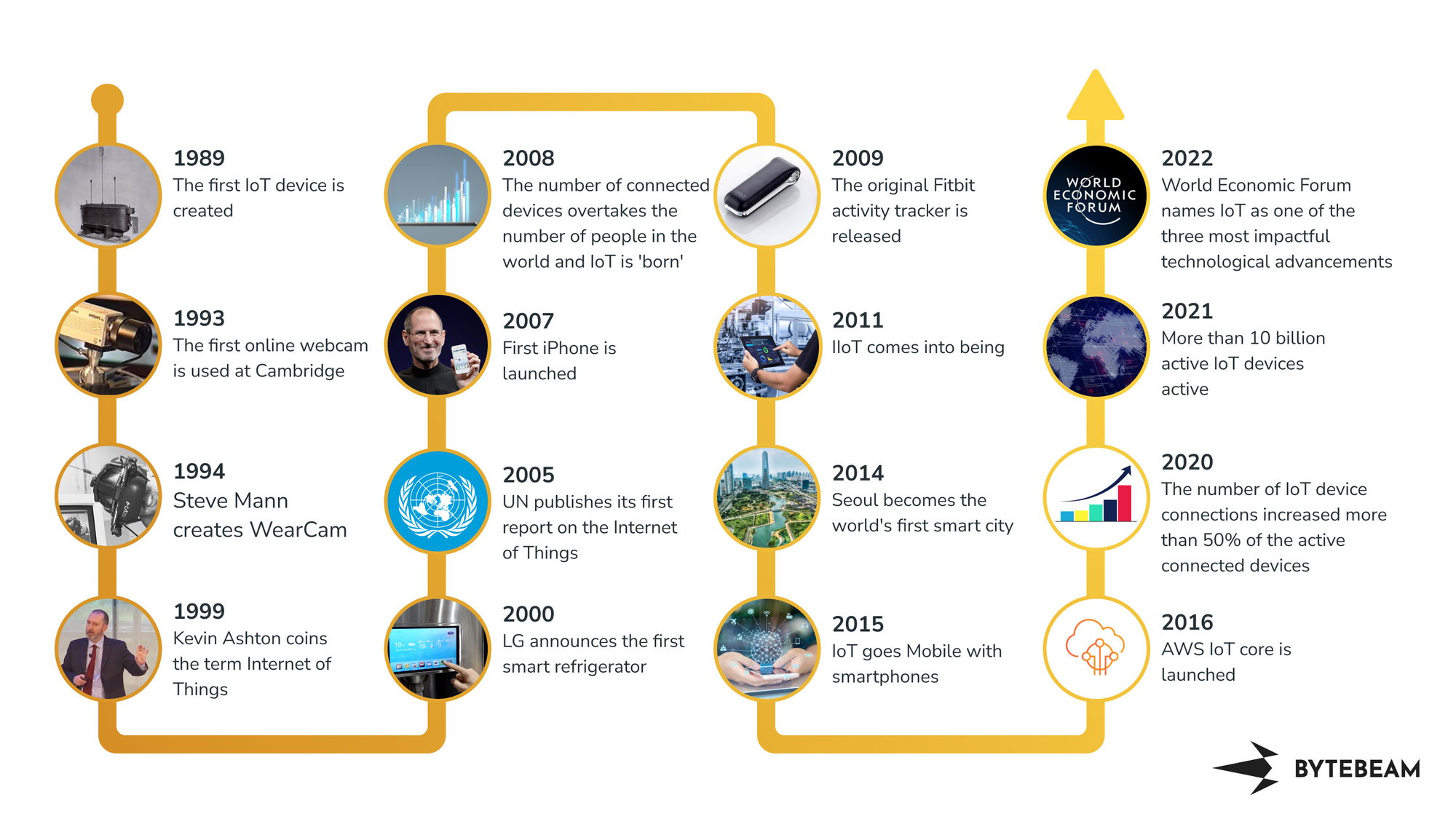

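In 1962, J.C.R. Licklider, who headed the Information Processing Techniques Office at the Advanced Research Projects Agency (ARPA, later renamed DARPA), envisioned a “galactic network” of interconnected computers. His concept evolved into the Advanced Research Projects Agency Network (ARPANET), which went live in 1969. During the 1980s, ARPANET adopted the TCP/IP protocol suite, and by the end of the decade the first commercial providers had begun offering public access. Thus the internet as we know it was born.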

In 1982, David Nichols and his colleagues at Carnegie Mellon University built the smart vending machine described above, which is widely regarded as the first IoT device. John Romkey and Simon Hackett followed in 1990 with the Internet Toaster. The toaster was a big milestone, as Romkey and Hackett successfully connected it to the internet and managed to turn it on and off remotely.

It is fair to say that the early breakthroughs in the Internet of Things came from researchers having fun with technology (and occasionally getting frustrated at the lack of beverages).

Don’t believe us?

Dr. Quentin Stafford-Fraser and his colleagues at the University of Cambridge invented the first online webcam for the same reason the smart vending machine was invented. When the researchers at Cambridge wanted coffee, they had to leave their workstations to fetch it, and they would often find the coffee pot empty. Dr. Stafford-Fraser and his colleagues installed a camera near the coffee pot that took pictures of it three times a minute. This let everyone check whether the pot was empty and saved them a wasted trip. The camera was connected to the internet in 1993 and became the first online webcam.

A year later, Steve Mann created WearCam, which turned out to be the first major milestone for wearable technology.

IoT is christened

By now, connecting devices to the internet was becoming a thing (pun intended). But it was still the result of odd, scattered experiments rather than a single technology. This changed in 1999, when Kevin Ashton, co-founder of the Auto-ID Center at MIT, coined the term ‘Internet of Things’. Ashton, who saw RFID as a prerequisite for IoT, was a firm believer in the technology’s potential.

He once said, “....today's information technology is so dependent on data originated by people that our computers know more about ideas than things. If we had computers that knew everything there was to know about things—using data they gathered without any help from us—we would be able to track and count everything, and greatly reduce waste, loss and cost….”

IoT goes household

At the turn of the millennium, smart technology began to bloom, and the Internet of Things made its way from research labs into homes. In 2000, LG announced the first ever smart refrigerator, which paved the way for the commercialization of IoT.

Less than a decade after the term was coined, the Internet of Things had become a phenomenon. The United Nations published its first report on the Internet of Things in 2005, deeming it one of the technologies with the most global potential.

The UN report’s predictions proved apt: just two years later, in 2007, Apple announced the first iPhone. Although the first ever smartphone was the Simon Personal Communicator, released by IBM in 1994, it was the iPhone that popularized smartphones globally, even though the original model shipped without support for third-party apps.

By 2008, the number of connected devices had overtaken the number of people in the world. This is often regarded as the point at which IoT was truly born, since enough devices were now online to collect data from almost anywhere in the world.

The 2000s were an exciting decade for IoT, as several milestones were achieved in a short period. As the technology and its utility rapidly evolved, IoT could finally become a part of everyday life. In 2009, Fitbit released its original activity tracker, the first wearable activity tracker, which paved the way for modern smartwatches.

As the 2010s began, IoT milestones became more technical and specific. The underlying technology had evolved enough that its sub-segments could start evolving on their own.

IoT becomes accessible

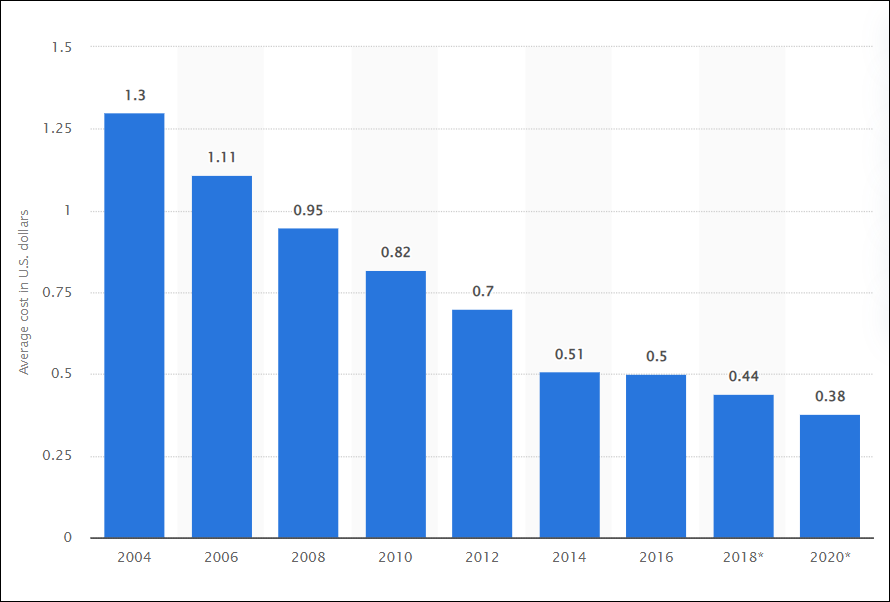

Industrial IoT (IIoT) had always existed alongside IoT as a specific application of the technology, but in 2010 sensor prices dropped far enough for sensors to be deployed widely. This enabled IIoT more than any other factor, as IIoT requires a large-scale setup of devices to run. The figure above shows the average cost of industrial Internet of Things (IoT) sensors from 2004 to 2020, according to Statista.

Industrial applications weren’t the only segment that received a boost from better and cheaper infrastructure. Smart cities suddenly became feasible with the widespread availability of IoT devices, and as a result, Seoul became the world’s first smart city in 2014. Shortly after that, Singapore, Amsterdam, and New York followed suit.

By this point, sensors were affordable enough to be built into almost any device, and wearable technology took advantage of this. IoT truly went mobile in 2015, as smartphones, smartwatches, health monitors, and GPS trackers became household staples.

IoT today and tomorrow

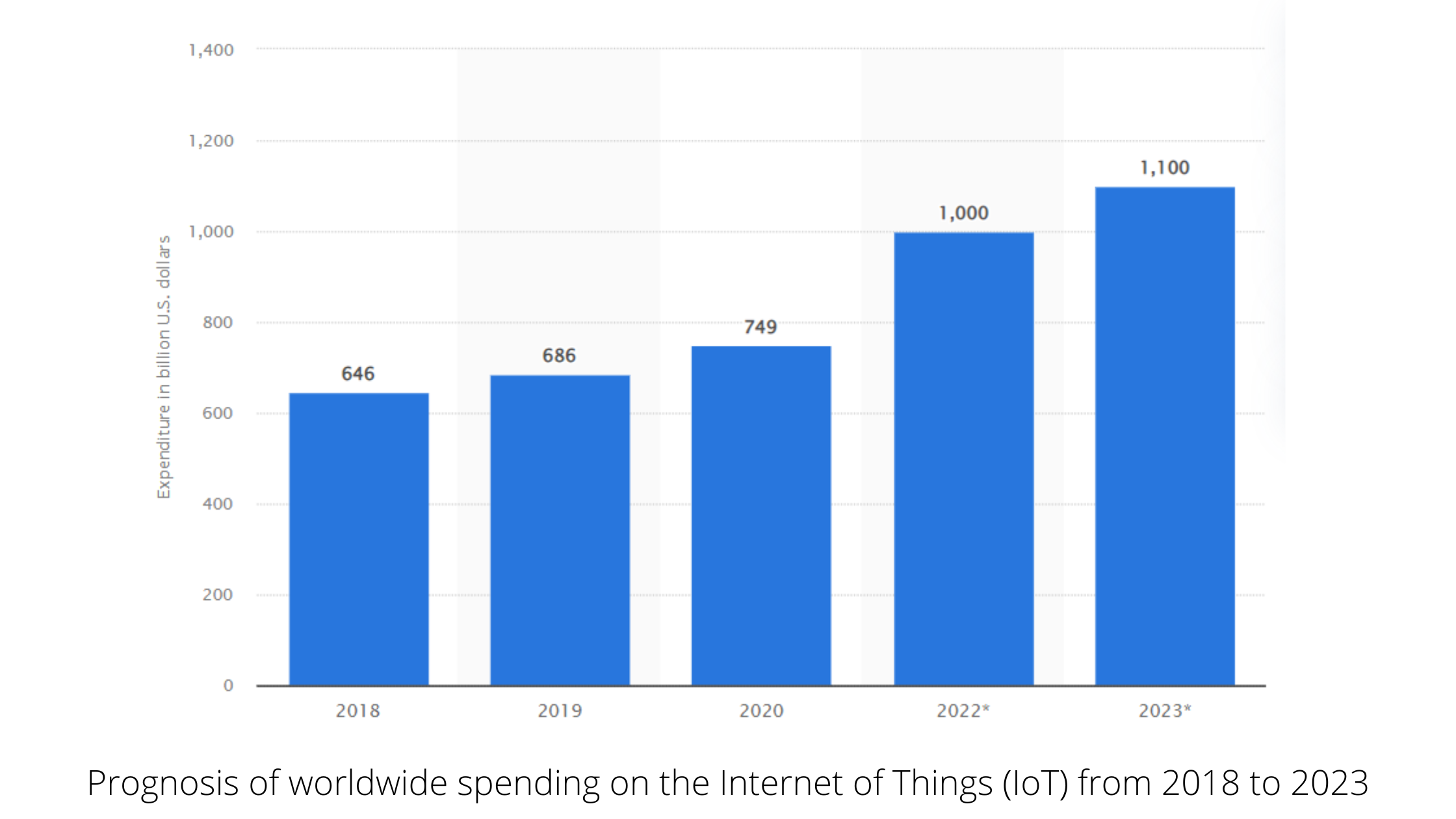

As IoT proved its value, businesses started investing in the technology and global spending on IoT rose. The figure above shows this rise in spending.

As the technology became lucrative enough to interest a wide range of businesses, IoT platforms started cropping up. AWS launched AWS IoT Core in 2015 and rolled it out completely by 2016. This was closely followed by Azure IoT Hub in 2016 and Google Cloud IoT Core in 2017.

In the following years, several other IoT platforms were launched, which allowed businesses to simplify their IoT projects and expand into the IoT space more easily. Partly as a result, in 2021, the number of connected IoT devices surpassed the number of non-IoT devices worldwide.

Reflecting its growing influence, the World Economic Forum named IoT one of the three most impactful technological advancements in 2022.

The history of IoT is full of fun experiments, complex evolution, and some major industry overhauls. There are several predictions for where the Internet of Things will go from here, but that’s for another post. For now, we hope this has shed some light on the journey that led to today’s IoT landscape.